Part One: Laying the Groundwork

In the world of DevOps, Continuous Integration (CI) and Continuous Deployment (CD) are essential practices that help teams deliver code changes more frequently and reliably. Azure and GitHub Actions are two powerful tools that can be used to implement these practices.

In this article, we'll use Terraform to set up a CI/CD pipeline with Azure and GitHub Actions. The two integrate closely, which makes the pairing a flexible and popular choice for developing, deploying, and managing applications. Like any technology, though, the combination has advantages and challenges that can influence the development and deployment journey.

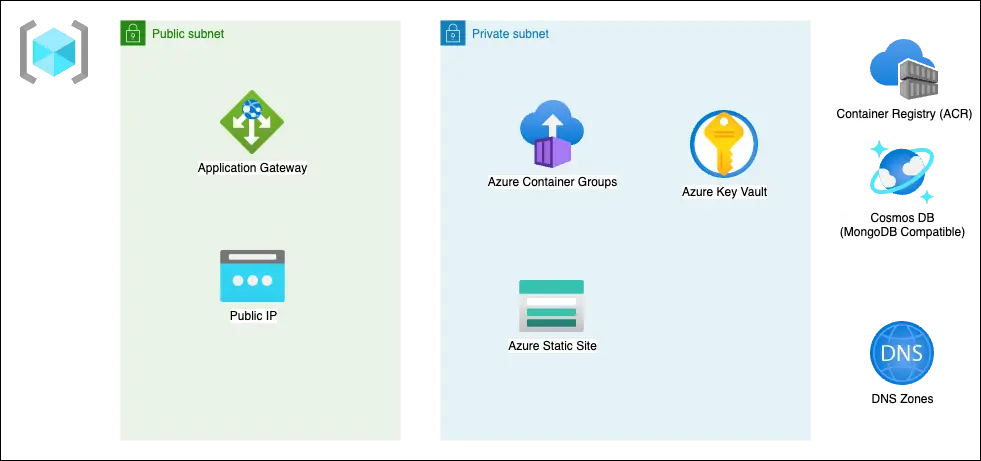

A Three-Tier App on Azure

Continuing the theme from the previous articles, we will set up a basic 3-tier containerized MERN (Mongo, Express, React, Node) application. In this implementation, the API runs in Azure Container Instances (ACI) container groups backed by Azure Cosmos DB. Cosmos DB is a multi-model, globally distributed database service that supports the MongoDB API, so developers can keep using familiar MongoDB features in a scalable, globally distributed environment. All secrets for the application are stored in Azure Key Vault, a centralized Azure service for managing cryptographic keys, secrets, and certificates with policy-driven access control.

Setting Up Azure Resources

As with the former articles in the series, this tutorial creates a simple to-do application and the accompanying CI / CD setup. You can skip to the end and see the entire script here.

Demo Prerequisites

- A Microsoft Azure Account with a default user that has administrative privileges.

- Azure CLI

- Terraform with the azurerm provider set up

- A registered domain name, set up with any domain registrar, with administrative access to change the domain's nameservers.

First stage - Azure Groundwork.

This project will be divided into three stages. Create three working directories to represent each stage. Each stage will contain the following structure:

stage_name

- main.tf

- outputs.tf

- variables.tf

- versions.tf

- config.tfvars

main.tf will contain the central provisioning, outputs.tf will have the output values used in subsequent stages, variables.tf will define the input variables for the stage, and config.tfvars will hold sensitive values for the provisioning script and should never be committed to a version control system.

First, we will set the provider information in a file called versions.tf. This sets up the Azure providers for Terraform as well as sets versions of the providers this code was tested against.

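```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "=3.74.0"
    }
    # Declared explicitly because main.tf uses azuread_* resources.
    # Assumption: the original did not pin this provider. The 2.x line is
    # used here because main.tf relies on the application_id and
    # application_object_id arguments, which the 3.x releases removed.
    azuread = {
      source  = "hashicorp/azuread"
      version = "~> 2.0"
    }
  }
}

provider "azurerm" {
  features {}
}

provider "azuread" {}
```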

Next, in a file called variables.tf, we set up the input variables for use with the first part of the script, including the Azure Region to use, an app "prefix" to use a standardized naming convention, the apex domain name of your choice (yourdomain.com) and a subdomain for that apex domain that will host the frontend.

```hcl
variable "region" {
  description = "Azure infrastructure region"
  type        = string
  default     = "East US"
}

variable "prefix" {
  description = "Prefix for all resources"
  type        = string
}

variable "domain_name" {
  description = "value of domain name"
  type        = string
}

variable "subdomain" {
  description = "value of subdomain"
  type        = string
}
```
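Because the prefix is later interpolated into resource names such as `${var.prefix}-kv` and `${var.prefix}acr`, a validation block can catch an unsuitable value at plan time. The following is a minimal sketch of how the `prefix` variable could be written instead; it is not part of the original script, and the exact pattern and length limit are assumptions chosen to fit Key Vault's 24-character name limit and the container registry's alphanumeric-only names.

```hcl
variable "prefix" {
  description = "Prefix for all resources"
  type        = string

  validation {
    # Assumed rule: a lowercase letter followed by 1-20 lowercase letters or
    # digits, so "<prefix>-kv" stays within 24 characters and "<prefix>acr"
    # stays alphanumeric and at least 5 characters long.
    condition     = can(regex("^[a-z][a-z0-9]{1,20}$", var.prefix))
    error_message = "The prefix must be 2-21 lowercase alphanumeric characters and start with a letter."
  }
}
```

With this in place, a value such as `todo_app` would be rejected during `terraform plan` rather than failing midway through an apply.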

Define the variable values in a file called config.tfvars in the same folder as the first stage script.

```hcl
domain_name = "YOURDOMAINHERE.COM" # your preregistered domain name
prefix      = "todoapp"            # any alphanumeric prefix you choose
subdomain   = "www"                # any subdomain you choose
```

Next, in main.tf (the main script of the first stage), we start by setting up an app registration, which gives us a client ID and a client secret. In Azure, the client ID and client secret function much like a username and password: Azure AD (Active Directory) uses them to validate the application and authorize its access to resources, which guards against unauthorized access. We also retrieve the configuration of the Azure provider, such as the subscription ID and tenant ID, so that these details are looked up dynamically rather than hardcoded.

We also set up the main resource group, which will contain all the resources created in this and subsequent stages. Azure Resource Groups organize related Azure resources so that they can be deployed, managed, and monitored together, with consistent policy and access control applied across the group.

```hcl
data "azurerm_client_config" "current" {}

# Create an Azure AD application
resource "azuread_application" "this" {
  display_name = "TerraformAcmeDnsChallengeAppService"
}

# Create a service principal for the Azure AD application
resource "azuread_service_principal" "this" {
  application_id = azuread_application.this.application_id
}

# Create a client secret for the Azure AD application
resource "azuread_application_password" "this" {
  application_object_id = azuread_application.this.object_id
  end_date_relative     = "8760h" # Valid for 1 year
}

resource "azurerm_resource_group" "this" {
  name     = "${var.prefix}-resources"
  location = var.region
}
```

Next, we set up the Azure Key Vault. Azure Key Vault is a cloud service provided by Microsoft Azure for securely storing and seamlessly managing cryptographic keys, secrets, and certificates used by cloud applications and services, ensuring centralized management of sensitive data with robust access control and audit history.

```hcl
resource "azurerm_key_vault" "this" {
  name                = "${var.prefix}-kv"
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
  tenant_id           = data.azurerm_client_config.current.tenant_id
  sku_name            = "standard"

  access_policy {
    tenant_id = data.azurerm_client_config.current.tenant_id
    object_id = data.azurerm_client_config.current.object_id

    certificate_permissions = [
      "Get", "List", "Delete", "Create", "Import", "Update", "ManageContacts",
      "GetIssuers", "ListIssuers", "SetIssuers", "DeleteIssuers", "ManageIssuers",
      "Recover", "Backup", "Restore", "Purge"
    ]

    key_permissions = [
      "Get", "List", "Delete", "Create", "Import", "Update",
      "Recover", "Backup", "Restore", "Purge"
    ]

    secret_permissions = [
      "Get", "List", "Delete", "Set", "Recover", "Backup", "Restore", "Purge"
    ]
  }
}

resource "azurerm_key_vault_secret" "client_app_service" {
  name         = "client-secret"
  value        = azuread_application_password.this.value
  key_vault_id = azurerm_key_vault.this.id
}

resource "azurerm_key_vault_secret" "client_id" {
  name         = "client-id"
  value        = azuread_application.this.application_id
  key_vault_id = azurerm_key_vault.this.id
}

# Assign a role to the service principal
resource "azurerm_role_assignment" "example" {
  scope                = "/subscriptions/${data.azurerm_client_config.current.subscription_id}"
  role_definition_name = "Contributor"
  principal_id         = azuread_service_principal.this.object_id
}

resource "azurerm_user_assigned_identity" "this" {
  name                = "${var.prefix}-identity"
  resource_group_name = azurerm_resource_group.this.name
  location            = azurerm_resource_group.this.location
}

resource "azurerm_key_vault_access_policy" "this" {
  key_vault_id = azurerm_key_vault.this.id
  tenant_id    = azurerm_user_assigned_identity.this.tenant_id
  object_id    = azurerm_user_assigned_identity.this.principal_id

  secret_permissions = ["Get"]
}
```

Let's break it down:

Key Vault Configuration (azurerm_key_vault):

- A resource block creates and configures an Azure Key Vault instance.

- It utilizes variables (`var.prefix`) and data from the Azure Resource Group (`azurerm_resource_group.this.name`/`location`) for its configuration.

- The tenant ID is retrieved dynamically using `data.azurerm_client_config.current.tenant_id`.

- The `access_policy` block defines what actions are permissible concerning certificates, keys, and secrets within the Key Vault, and it specifies that these permissions apply to the current Azure tenant and object (potentially a service principal or user) executing the Terraform code.
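One caveat worth noting: the azurerm provider documentation warns against managing a vault's access policies both inline (the `access_policy` block) and through separate `azurerm_key_vault_access_policy` resources, because the two approaches conflict. The script above does both. A minimal sketch of one way to avoid this, assuming you drop the inline `access_policy` block from `azurerm_key_vault.this`, is to express the deployer's policy as a standalone resource as well. The resource name `deployer` is hypothetical, and the permission list is trimmed for illustration.

```hcl
# Hypothetical replacement for the inline access_policy block: the identity
# running Terraform gets its permissions through a standalone policy.
resource "azurerm_key_vault_access_policy" "deployer" {
  key_vault_id = azurerm_key_vault.this.id
  tenant_id    = data.azurerm_client_config.current.tenant_id
  object_id    = data.azurerm_client_config.current.object_id

  # Trimmed for illustration; keep whichever permissions from the original
  # inline block you actually need.
  secret_permissions = ["Get", "List", "Set", "Delete", "Recover", "Purge"]
}

# Secrets must wait for the policy, because Terraform cannot write them
# until the deployer has been granted access.
resource "azurerm_key_vault_secret" "client_id" {
  name         = "client-id"
  value        = azuread_application.this.application_id
  key_vault_id = azurerm_key_vault.this.id

  depends_on = [azurerm_key_vault_access_policy.deployer]
}
```

The same `depends_on` would apply to the "client-secret" resource.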

Secrets Configuration (azurerm_key_vault_secret):

- Two secret resources are configured within the previously defined Key Vault (`azurerm_key_vault.this.id`).

- The first secret, "client-secret", stores the password value associated with an Azure AD application, while the second, "client-id", holds the Application (client) ID of that application. The values of these secrets are retrieved from `azuread_application_password.this.value` and `azuread_application.this.application_id`, respectively.

Role Assignment (azurerm_role_assignment):

- This block assigns the "Contributor" role to a service principal at the scope of the subscription, permitting it to manage resources in the subscription. The subscription ID is retrieved dynamically using `data.azurerm_client_config.current.subscription_id`, and the service principal's object ID is retrieved from `azuread_service_principal.this.object_id`.

The code leverages Terraform to facilitate the secure management and storage of sensitive application-related credentials within Azure Key Vault while dynamically retrieving configuration parameters and managing role-based access controls to secure and streamline resource management in the Azure environment.
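Contributor on the whole subscription is a broad grant. If the service principal only needs to manage resources created by this project, the assignment could be scoped to the resource group instead. The following is a sketch of that variation, not the original configuration; it assumes the later stages never touch anything outside `azurerm_resource_group.this`.

```hcl
resource "azurerm_role_assignment" "example" {
  # Assumption: resource-group scope is sufficient for the later stages.
  scope                = azurerm_resource_group.this.id
  role_definition_name = "Contributor"
  principal_id         = azuread_service_principal.this.object_id
}
```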

After key vault creation, we set up the virtual network that the Application will use. This code orchestrates the deployment and configuration of several networking resources within the Azure environment:

```hcl
resource "azurerm_virtual_network" "this" {
  name                = "${var.prefix}-network"
  resource_group_name = azurerm_resource_group.this.name
  location            = azurerm_resource_group.this.location
  address_space       = ["10.0.0.0/16"]
}

resource "azurerm_subnet" "public" {
  name                 = "public-subnet"
  resource_group_name  = azurerm_resource_group.this.name
  virtual_network_name = azurerm_virtual_network.this.name
  address_prefixes     = ["10.0.1.0/24"]
}

resource "azurerm_subnet" "private" {
  name                 = "private-subnet"
  resource_group_name  = azurerm_resource_group.this.name
  virtual_network_name = azurerm_virtual_network.this.name
  address_prefixes     = ["10.0.2.0/24"]

  delegation {
    name = "containerinstance"

    service_delegation {
      name = "Microsoft.ContainerInstance/containerGroups"
      actions = [
        "Microsoft.Network/virtualNetworks/subnets/join/action",
        "Microsoft.Network/virtualNetworks/subnets/prepareNetworkPolicies/action"
      ]
    }
  }
}

resource "azurerm_network_security_group" "this" {
  name                = "${var.prefix}-nsg"
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name

  security_rule {
    name                       = "allow_appgateway_to_aci"
    priority                   = 1002
    direction                  = "Inbound"
    access                     = "Allow"
    protocol                   = "Tcp"
    source_port_range          = "*"
    destination_port_range     = "443"
    source_address_prefix      = "*"
    destination_address_prefix = "*"
  }

  security_rule {
    name                       = "allow_appgateway_v2_ports"
    priority                   = 1001
    direction                  = "Inbound"
    access                     = "Allow"
    protocol                   = "Tcp"
    source_port_range          = "*"
    destination_port_range     = "65200-65535"
    source_address_prefix      = "*"
    destination_address_prefix = "*"
  }

  security_rule {
    name                       = "allow_all_outbound"
    priority                   = 1003
    direction                  = "Outbound"
    access                     = "Allow"
    protocol                   = "*"
    source_port_range          = "*"
    destination_port_range     = "*"
    source_address_prefix      = "*"
    destination_address_prefix = "*"
  }
}

# Associate NSG with public subnet
resource "azurerm_subnet_network_security_group_association" "public" {
  subnet_id                 = azurerm_subnet.public.id
  network_security_group_id = azurerm_network_security_group.this.id
}

# Associate NSG with private subnet
resource "azurerm_subnet_network_security_group_association" "private" {
  subnet_id                 = azurerm_subnet.private.id
  network_security_group_id = azurerm_network_security_group.this.id
}

resource "azurerm_public_ip" "this" {
  name                = "${var.prefix}-publicip"
  location            = azurerm_resource_group.this.location
  resource_group_name = azurerm_resource_group.this.name
  allocation_method   = "Static"
  sku                 = "Standard"
}
```

Virtual Network (azurerm_virtual_network):

- Creates a virtual network named based on a variable prefix and specifies an IPv4 address space of "10.0.0.0/16".

- The `resource_group_name` and `location` are derived from the previously defined Azure Resource Group resource.

Subnets (azurerm_subnet):

- Two subnets are defined within the aforementioned virtual network: a "public-subnet" with an address prefix of "10.0.1.0/24", and a "private-subnet" with "10.0.2.0/24".

- The "private-subnet" includes a delegation block to allow Azure Container Instance resources to be deployed into the subnet, specifying the required actions for managing network policies.
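The two subnet prefixes are hardcoded, but they could also be derived from the virtual network's address space with Terraform's built-in `cidrsubnet` function. The sketch below shows the idea; the local names `vnet_cidr`, `public_subnet_cidr`, and `private_subnet_cidr` are hypothetical and do not appear in the original script.

```hcl
locals {
  vnet_cidr = "10.0.0.0/16"

  # Adding 8 bits to the /16 prefix yields /24 networks; the last argument
  # selects which /24 to take.
  public_subnet_cidr  = cidrsubnet(local.vnet_cidr, 8, 1) # "10.0.1.0/24"
  private_subnet_cidr = cidrsubnet(local.vnet_cidr, 8, 2) # "10.0.2.0/24"
}
```

The network and subnet resources would then use `address_space = [local.vnet_cidr]` and `address_prefixes = [local.public_subnet_cidr]`, so changing the address space in one place keeps the subnets consistent.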

Network Security Group (azurerm_network_security_group):

- Establishes a network security group (NSG) that contains three security rules:

- "allow_appgateway_to_aci": Allows inbound TCP traffic on port 443 from any source.

- "allow_appgateway_v2_ports": Permits inbound TCP traffic from any source on ports 65200-65535. The Azure Application Gateway (v2) set up later in the series requires these ports for its infrastructure communication.

- "allow_all_outbound": Allows all outbound traffic on all protocols and ports to any destination.

- The NSG name is constructed using a variable prefix and is associated with the same resource group as the virtual network.

Subnet-NSG Associations (azurerm_subnet_network_security_group_association):

- The NSG is associated with both the public and private subnets, enforcing the defined security rules on all resources within these subnets.

Public IP (azurerm_public_ip):

- A static, standard SKU public IP address is provisioned, named with the defined prefix, and associated with the aforementioned resource group and location. The term "SKU," which stands for Stock Keeping Unit, refers to a particular resource category or version with specific features, capabilities, and pricing. Regarding Public IP addresses in Azure, SKUs determine features like pricing, availability zones, and IP address assignment (Dynamic or Static).

In summary, the code effectively sets up a structured Azure network with well-defined access rules and address spaces, ensuring controlled access and communications between resources while preparing a private subnet for containerized service deployment with specific network delegations.
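As written, the inbound rules accept traffic from any source. Azure publishes service tags that can stand in for `*` where the sender is known; for the Application Gateway v2 infrastructure ports, Microsoft's documentation names the `GatewayManager` tag as the source. The following is a hedged sketch of a tightened rule, meant as a drop-in for the `allow_appgateway_v2_ports` block and untested against the later stages of this series.

```hcl
# Fragment: replaces the matching security_rule block inside
# azurerm_network_security_group.this.
security_rule {
  name                       = "allow_appgateway_v2_ports"
  priority                   = 1001
  direction                  = "Inbound"
  access                     = "Allow"
  protocol                   = "Tcp"
  source_port_range          = "*"
  destination_port_range     = "65200-65535"
  source_address_prefix      = "GatewayManager" # service tag instead of "*"
  destination_address_prefix = "*"
}
```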

In the last part of main.tf in stage one, we define the DNS zone for the domain, add an A record at the apex that points to the public IP, and grant the identity running Terraform the DNS Zone Contributor role on the resource group. We also set up the container registry that will hold the image for the target application. Azure Container Registry (ACR) is a managed Docker container registry service for storing and managing container images. In this setup, we create a Basic-tier ACR with admin access enabled.

```hcl
resource "azurerm_dns_zone" "this" {
  name                = var.domain_name
  resource_group_name = azurerm_resource_group.this.name
}

resource "azurerm_dns_a_record" "this" {
  name                = "@" # "@" denotes the root domain
  zone_name           = azurerm_dns_zone.this.name
  resource_group_name = azurerm_resource_group.this.name
  ttl                 = 300
  records             = [azurerm_public_ip.this.ip_address]
}

resource "azurerm_role_assignment" "dns_contributor" {
  principal_id         = data.azurerm_client_config.current.object_id
  role_definition_name = "DNS Zone Contributor"
  scope                = azurerm_resource_group.this.id
}

# Azure Container Registry
resource "azurerm_container_registry" "this" {
  name                = "${var.prefix}acr"
  resource_group_name = azurerm_resource_group.this.name
  location            = azurerm_resource_group.this.location
  sku                 = "Basic"
  admin_enabled       = true
}
```
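Enabling the admin account is the simplest way to pull images, but it relies on a shared username and password. An alternative worth considering is to grant the user-assigned identity created earlier the built-in `AcrPull` role on the registry. This is only a sketch: the resource name `acr_pull` is hypothetical, and whether the container group in the later stages can pull with a managed identity depends on how it is configured there.

```hcl
resource "azurerm_role_assignment" "acr_pull" {
  scope                = azurerm_container_registry.this.id
  role_definition_name = "AcrPull"
  principal_id         = azurerm_user_assigned_identity.this.principal_id
}
```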

Lastly, we will create an outputs.tf file that structures the values produced once this script is run.

```hcl
output "nameservers_list" {
  value = [for ns in azurerm_dns_zone.this.name_servers : ns]
}

output "grouped_outputs" {
  value = {
    resource_group = azurerm_resource_group.this.name
    key_vault      = azurerm_key_vault.this.name
    acr            = azurerm_container_registry.this.name
    network = {
      name           = azurerm_virtual_network.this.name
      public_subnet  = azurerm_subnet.public.name
      private_subnet = azurerm_subnet.private.name
    }
    zone_name = azurerm_dns_zone.this.name
  }
}
```
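Grouping the values into one output makes them easy to carry into the next stage. If you would rather not copy them by hand, a later stage can read them directly with the `terraform_remote_state` data source. The sketch below assumes the default local backend and a hypothetical sibling directory named `stage_one`; neither is specified in this article.

```hcl
data "terraform_remote_state" "stage_one" {
  backend = "local"

  config = {
    # Hypothetical path to the first stage's state file.
    path = "../stage_one/terraform.tfstate"
  }
}

locals {
  # Values read from the first stage's grouped_outputs output.
  resource_group_name = data.terraform_remote_state.stage_one.outputs.grouped_outputs.resource_group
  private_subnet_name = data.terraform_remote_state.stage_one.outputs.grouped_outputs.network.private_subnet
}
```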

Once the script is complete and the working directory has been initialized with terraform init, run a plan to check for syntax errors and to review what you are about to create in Azure:

```shell
terraform plan -var-file config.tfvars
```

Then apply the first-stage script, and copy the output values it produces for use in the later stages:

```shell
terraform apply -var-file config.tfvars
```

If you are following along, take the name servers from this output and update your domain registrar with the four name servers listed. They will have the following format in the output, where ## is a two-digit number (be sure to include the trailing periods):

```
nameservers_list = [
  "ns1-##.azure-dns.com.",
  "ns2-##.azure-dns.net.",
  "ns3-##.azure-dns.org.",
  "ns4-##.azure-dns.info.",
]
```

A registrar update can take some time to take effect, because the change has to propagate globally before yourdomain.com resolves through the Azure DNS name servers above.

The first stage is complete!

This code lays the groundwork for a simple to-do app running in Azure using GitHub Actions. Proceed to part 2 for domain certificate setup.